1999

First presented at the “That Media Thing” symposium in October 1999

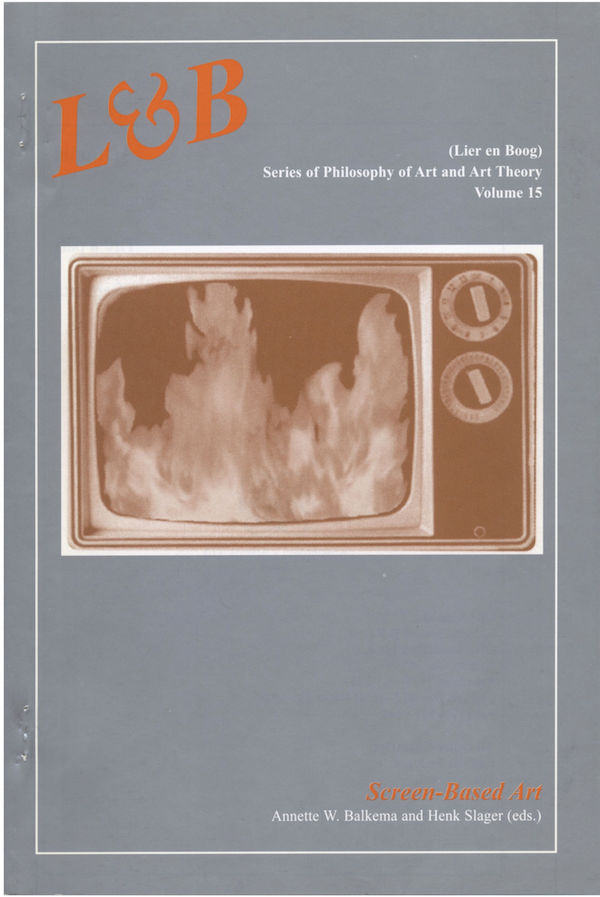

Published in Lier en Boog Series of Philosophy of Art and Art Theory, Volume 15, Screen-Based Art

Composing images


A PDF file of the L&B publication can be downloaded here.

A tape I produced almost a year ago is a single-channel work entitled Ephèmerios. Ephemeros is the Greek root of ephemeral, a word which occurs in many European languages in nearly identical forms. Like ephemeral, ephemeros means “short-lived, existing or continuing for a short time only”. The form ephemerios, however, was also used by the ancient Greek poets to complain about life’s fleetingness.

The tape Ephèmerios was first shown at the French-Baltic-Nordic Video Festival in Tallinn, Estonia, in 1998. Since then, it has been shown at several other festivals, including Amsterdam’s 1999 World Wide Video Festival. The Amsterdam World Wide Video Festival catalogue said about the work:

“The artist forces the viewer to watch and while watching not to concentrate on what is being seen. In this landscape one can let the imagination take over, stop paying attention and dream on, dream of that island, far away.”

Needless to say, I was not too thrilled with an interpretation of the work whereby the spectator is invited “not to concentrate on what is being seen.”

Another showing of the tape was in a presentation entitled “Beyond Reality”, part of the 1999 series “De Avonduren” by the Montevideo Dutch Institute of Media Art in the suB-K gallery in Utrecht. In this presentation, several works by different artists were grouped under the topic “changed/altered realities”. The participating artists were invited to attend this event since the aim was to have an open discussion between artists and audience. I ended up in a discussion on the question of whether or not my tape features an island and whether or not “it is about an island”. Well, to me it is not.

To me, the screen is one of the output areas of the machine behind it, whether that machine is the computer or the video-recorder. What is visible on the screen or what is heard through the loudspeakers is a result of the machine’s processes and processing. In other words, what is visible on the screen is not the image of the object the image was taken from, which is much like René Magritte’s “This Is Not A Pipe” – a notion that still seems to be alien to most of the world of screen-based art today. Usually, a pipe on a video screen or computer monitor is considered an object that features in a storyline. However, such a consideration is a very filmic interpretation of a video screen and historically incorrect as well.

From the start, the development of film was driven by the desire to reproduce movement, whereas in video – or television, from which video developed – the desire to reproduce movement was superseded by the desire to conquer distance. Thus in video, image fidelity has always been sacrificed for the sake of conquering distance. Consequently, film has ended up being a very passive medium capable only of movement recording and movement playback, while video, because of its technology, allows for a wide range of post-production processing. I believe that ignoring the difference in these technologies in favour of one interpretation for all screen-based media means ignoring the medium. Such a limited interpretation could be compared with a similarly unlikely claim that the transition from tempera to oil paint had no effect whatsoever on the history of painting.

Like René Magritte’s image of a pipe, the image in Ephèmerios has nothing to do with an island. Indeed, an island was shot, but in being transferred onto videotape, the island was also transformed into something else. It turned into a continuous waveform of electronic values or a stream of digital values – depending on whether you consider video to be analogue electronic or digital data – representing the subsequent video scan-lines and video frames.

A very interesting and painfully true observation about digital video was made by Miller Puckette, who developed the MAX software at IRCAM. He said that

“digital video is currently in a similar state electronic music was in in the 1940’s.”

So far, digital video has only been concerned with cutting virtual tape and sticking the pieces back together again. That is exactly what electronic music did in the 1940’s.

Most computer software currently in use by visual artists employs interface metaphors based on analog, real world predecessors. In Photoshop, for example, the screen area where one paints is still called a canvas and painting itself is still done with brushes and pencils that replicate real world brush and pencil prints. For years there has been a war among different software companies over who could write the best plugin for charcoal, with the sole purpose of replacing real world charcoal. For video software the situation is not much different, since video software still deals with strips of film stored in bins while cuts are made by virtual scissors. The implications of Marshall McLuhan’s statement,

“First we shape our tools, thereafter they shape us”

seem to have become all too clear. Thus, a development like the one in electronic music over the last few decades, where electronics have sparked new instruments, new forms of music and new modes of interaction between performer, instrument and audience which could not have existed otherwise, still has to take place in digital video. Some computer software which could effect that is emerging, though most of it is still heavily under development.

In the past eight or nine years, I have done a huge amount of online video-editing, mainly for television. I have edited almost everything from small-scale local news items to lengthy documentaries – some of which were shot over a period of three to ten years and broadcast in five or six different countries – but also many television commercials and the occasional music video. The online edit room is almost home to me, not only in the amount of time spent there, but more so in terms of technology and content production.

The experience I accumulated over the years has led to a situation where the decision-making process upon which this kind of storytelling relies, traditional to television – and by extension to film, upon which television is so eager to lean – has become much like a reflex to me.

Knowing television that well also means being aware of its limitations. It is this kind of storytelling I refer to when I talk about our habits of perceiving what is on screen as props and actors and what I consider traditional video/film narrative. That narrative is in complete coherence with oral storytelling, but largely ignores the fact that screen-based media are audiovisual media. Personally, I have always felt that video has much more in common with music than with film.

Somehow a great deal of the process of making a video is similar to composing music. Moreover, since in my videos a pipe is not a pipe anyway, I have long lost the narrative storyline which, in my view, film is all about. Interestingly and strikingly enough, almost all efforts toward developing new computer software which could enable new ways of processing video stem from the field of music. Some of the most important ones, I believe, would include Miller Puckette’s Pd, a descendant of his MAX/MSP software that has already revolutionized electronic music in the past decade. For MAX/MSP, Netochka Nezvanova of 0f003+punktprotokol developed the nato.0+55 object for live video. But certainly also software like Image/ine, which is developed by Tom Demeijer at STEIM, the Studio for Electro-Instrumental Music in Amsterdam, fits into this category.

In music, synthetically generated sounds and, more specifically, sounds no longer referring to existing real world instruments have been around and accepted for decades. Many different sound synthesis theories – ranging from FM synthesis to Granular synthesis, to Modal synthesis, and many others – exist, as does the software to implement them. Each of these theories and its software implementation is more or less suited for a specific type of sound, depending on the specific qualities of that sound and the desired transformations. In sound synthesis and analysis, sound is thought of as a group of frequencies, usually a fundamental frequency with harmonics and a temporal envelope describing its temporal development. In other words, in the digital domain sound is considered in terms of physical properties rather than in terms of analog technologies. In general, these software packages do not aim at substituting for real world instruments. As one of the inventors of the synthesizer, Robert Moog, said about the early days of the synthesizer:

“People outside electronic music thought of them – the synthesizers – as replacements for analog instruments while people in electronic music were much more interested in using them to create new sounds.”
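The description of sound as a fundamental frequency with harmonics and a temporal envelope can be illustrated with a minimal additive-synthesis sketch. This is only an illustration under stated assumptions, not one of the packages mentioned in this text: the function name `additive_tone`, the linear attack/release envelope, and all default values are hypothetical choices made for the example.

```python
import math


def additive_tone(fundamental_hz, harmonic_amps, duration_s,
                  sample_rate=8000, attack_s=0.01, release_s=0.05):
    """Build a tone from a fundamental plus harmonics, shaped by an envelope.

    harmonic_amps[0] is the amplitude of the fundamental, harmonic_amps[1]
    that of the second harmonic, and so on. All names and defaults here are
    illustrative assumptions.
    """
    n = int(duration_s * sample_rate)
    samples = []
    for i in range(n):
        t = i / sample_rate
        # Sum of sine partials: harmonic k has frequency k * fundamental.
        value = sum(
            amp * math.sin(2 * math.pi * fundamental_hz * k * t)
            for k, amp in enumerate(harmonic_amps, start=1)
        )
        # Temporal envelope: linear fade-in, plateau, linear fade-out.
        envelope = min(1.0, t / attack_s, (duration_s - t) / release_s)
        samples.append(envelope * value)
    return samples


# A 220 Hz tone with two weaker harmonics, half a second long.
tone = additive_tone(220.0, [1.0, 0.5, 0.25], 0.5)
```

Changing the list of harmonic amplitudes or the envelope times changes the character of the sound without referring to any existing instrument, which is the attitude described above.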

Similar to how a composer decides on the use of an instrument based on sound properties such as pitch, harmonics, and spectrum, I select my images. What has intrigued me for a long time now is that such an attitude is perfectly acceptable when one is a composer or working with sound. However, as soon as one starts working visually and selects images rather than instruments, the image is at once no longer interpreted based on its image qualities, but interpreted as the representation of an object. Such an object has a meaning, is a character or a prop in a storyline and even functions in a metaphorical, metaphysical, and philosophical statement or theme. The traditional view is that a pipe is always a pipe and that, as a pipe, it is a metaphor for something else. In the context of the art world, this is exactly the quality of the image I am not interested in.

Conversely, I am fascinated by the qualities of the image based on intensities of luma and chroma – light and color – and their shapes and temporal development. Thus, the image of the island in Ephèmerios interests me because of its quality of ambiguity: disappearing into the mist and emerging from it again, being an image and being noise at the same time, and going back and forth between these states in a natural way.

The image already had this ambiguous quality when I shot it. It appeared as noise or mist, and changed and reshaped its form. By processing the image, I reshaped that quality into a temporal structure, making it more accessible. But I also added to it by employing the image-data expressing this quality and by manipulating the data through speeding up and slowing down. I ultimately convolved multiple layers of the same image at different speeds and different positions in time and, in this way, I used the very image itself to generate the final tape. It is the convolution of the ephemeral quality of the video image that expresses the ephemerality already present in the original image through its continuous disappearing into noise and reappearing from it. That takes the image to its very limits: the limits of the monitor, the limits of video, and the grey area between image and noise. It is the amplification and the restructuring of the very parameters that struck me in the original footage.

All this also applies to Videogram, a project I am currently working on. Videogram consists of a series of smaller installations where wall-mounted LCD projectors project a video image onto a sheet of perspex hung at about 1.5 meters from the wall. Each projection has its specific perspex screen and its specific continuous video loop, but every set-up of the installation could imply any number of such smaller units in any combination.

The title Videogram is derived from the Latin “video” – I see – and the Greek “gram” – I write. So, Videogram means “I see, therefore I write”. In physics and quantum mechanics, the act of watching defines and modifies what is seen. Something similar happens in this installation. In video images of this kind of abstraction, the viewer’s attitude while watching the screen seems to be of more importance than anything else in defining what and how the viewer will experience what is on screen. In video, this phenomenon seems to have more impact than in any other medium and generally the importance of this is ignored or simply not understood. Part of that is caused by the fleeting or ephemeral quality of video. A single video image simply takes slightly longer to write to the screen than it takes for the phosphor to die out again. So, a full frame of video never exists. For a video image, there is no such thing as returning, reconsidering or a second look, as is the case with paintings. On the contrary, the video image is seen, taken in, and experienced in less than real time, since the image already disappears while it is still being written. This is not only demanding on the viewer, but also limits what can be expressed in a given amount of video time. There is always a certain tension between these two aspects. For example, if one watches the Videogram videos in an unattached way and simply looks for global overviews and global changes, one would see a radically different video than if one allowed oneself to be immersed in tiny little details. Then the viewer would go from one part of the screen to another and discover something new and different every time one visited the installation.

The images used for Videogram are selected solely on the basis of their image properties such as intensities of light and color, shapes, movement over time and so on. The first series I did was all shots of flowers and blossoms for the obvious reasons of light and color. At the moment I am also working with other images such as landscapes. Different processing techniques appear to be suitable for different images, similar to how specific sounds suit different analysis and different re-synthesis techniques. The kind of processing applied to these images is based entirely on the direct parameter values of the images themselves, i.e. the values for the color components that define the image’s light and color intensities. By convolving one image with another at a different speed and at different times, the two images generate a third one, which has properties of both images but is at the same time different from both source images. Such an image, which can only originate from this process, could never come into being outside the computer and its output area: the screen.
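The idea of combining two image sequences at different speeds and positions in time can be sketched roughly as follows. The text does not specify the exact operation used, so this example makes loud assumptions: frames are small grids of values between 0 and 1, each clip loops, and a plain per-pixel product stands in for the combination step. The names `retime`, `blend` and `layer` are hypothetical.

```python
def retime(frames, speed, offset, length):
    """Resample a looping clip: output frame i shows source frame offset + i * speed."""
    return [frames[int(offset + i * speed) % len(frames)] for i in range(length)]


def blend(frame_a, frame_b):
    """Per-pixel product of two frames (an assumed stand-in for the combination step)."""
    return [
        [a * b for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(frame_a, frame_b)
    ]


def layer(clip_a, clip_b, speed_a=1.0, speed_b=0.5, offset_b=0, length=None):
    """Combine two clips running at different speeds and time offsets into a third."""
    length = length or len(clip_a)
    layer_a = retime(clip_a, speed_a, 0, length)
    layer_b = retime(clip_b, speed_b, offset_b, length)
    return [blend(fa, fb) for fa, fb in zip(layer_a, layer_b)]


# Four tiny one-row frames; the same clip is layered onto itself
# at half speed, starting one frame later.
clip = [[[0.0, 1.0]], [[0.5, 0.5]], [[1.0, 0.0]], [[0.25, 0.75]]]
result = layer(clip, clip, speed_a=1.0, speed_b=0.5, offset_b=1, length=4)
```

The resulting sequence depends on both sources at every pixel, so it carries properties of each while matching neither. Other combination rules could be swapped into `blend` without changing the rest of the sketch.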

I have taken a similar approach in an as-yet untitled installation I am currently working on. This installation consists of a video-image – either a projection or a large LCD video-screen fitted on the wall – showing an image of the exhibition space, as shot by a small video camera installed directly beneath that video image. The camera captures a single frame of video at regular short intervals. These images are compared to a computer-stored image of the empty exhibition space. Only the changes between the two are extracted. These differences between the image of the empty space and the sequence of images of the exhibition space as visitors walk through are accumulated in order to form a single new image, one that compiles everything that moves and changes in that space. This accumulation of changes is then used to modulate the video image of the empty gallery space in the projection. The two images are convolved and in this way the changes inside the exhibition space – its fluidity, its ephemerality – are stored and used to modulate the space itself, revealing a map which shows the use of the space by the visitors and reveals the network of interactions between the space and its users.
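The steps described for this installation (compare each captured frame with the stored empty-room image, keep only the changes, accumulate them, and use the result to modulate the empty-room image) can be sketched as below. This is a hedged illustration only: the threshold, the clamped sum used for accumulation, and the brightening rule used for modulation are assumptions made for the example, and the function names are hypothetical.

```python
def difference(frame, background, threshold=0.1):
    """Per-pixel change against the empty-room image; small changes are dropped.

    The threshold is an assumption, added here to ignore camera noise.
    """
    return [
        [abs(p - b) if abs(p - b) > threshold else 0.0 for p, b in zip(row_f, row_b)]
        for row_f, row_b in zip(frame, background)
    ]


def accumulate(frames, background, threshold=0.1):
    """Sum the changes of all captured frames into one image, clamped to 1.0."""
    acc = [[0.0] * len(row) for row in background]
    for frame in frames:
        diff = difference(frame, background, threshold)
        acc = [
            [min(1.0, a + d) for a, d in zip(row_a, row_d)]
            for row_a, row_d in zip(acc, diff)
        ]
    return acc


def modulate(background, acc):
    """Brighten the empty-room image where activity was recorded (one possible rule)."""
    return [
        [min(1.0, b + (1.0 - b) * a) for b, a in zip(row_b, row_a)]
        for row_b, row_a in zip(background, acc)
    ]


# A two-pixel "room": a visitor changes only the second pixel in both captures.
empty_room = [[0.5, 0.5]]
captures = [[[0.5, 1.0]], [[0.5, 0.0]]]
activity = accumulate(captures, empty_room)
projected = modulate(empty_room, activity)
```

Pixels that never change keep their original value, while pixels that visitors pass through build up over time, so the projected image gradually becomes a map of how the space is used.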

Neither Videogram nor this installation uses sound, but in their manipulation of the video-image, in its convolution, they lean heavily on theories and techniques from the world of electronic music. I am interested in the convergence of video and sound, not in a synesthetic way, but as an exchange and a crossbreeding of ideas and their implementations. Since by now both music and video rely heavily on the computer – more often than not the exact same machine – this implies that both fields share the same underlying mathematics.

To what extent could algorithms developed to implement a specific theory for either video or music be applicable to both fields? The new crop of software I mentioned before is bridging this gap and is rapidly surfacing at this moment. That makes it a very interesting and very promising field of exploration.

P.S. At about the time of the symposium, the San Francisco based software company Synthetik Software Inc. released version 1.0 of its commercial graphics software “Studio Artist”. The company advertises it as a “Graphics Synthesizer”. The software takes metaphors from music synthesis and applies those metaphors to computer graphics – both for stills and video – not only as part of the interface, but especially in the different painting modes the program offers, many of which had not been available for computer graphics before. While the art world is still involved in a race to embrace and appropriate video and new media into its structures and still has about twenty-five or so years of video art history to catch up on, history repeats itself.

Quotations

This text was quoted in “Live Cinema: Designing an Instrument for Cinema Editing as a Live Performance” by Michael Lew, presented at NIME 2004.